Micron’s HBM Memory Boosts Nvidia AI Chips and Investor Interest

Tech companies are facing a major memory shortage as demand from AI hardware grows faster than memory makers can supply it. Nvidia’s next superchip, “Rubin,” set to launch in late 2026, will require up to 288GB of HBM4 memory, a large jump from older chips. More memory lets AI systems handle larger and more complex workloads, but the sudden surge in demand puts enormous pressure on memory manufacturers. That demand has also moved Micron’s share price, as investors react to the company’s central role in supplying AI memory.

What is HBM?

HBM, or High Bandwidth Memory, is a type of very fast memory used in AI chips. Unlike the memory in your laptop or phone, HBM is stacked in layers and placed very close to the processor. This design lets it move data quickly while using less power. For AI chips like Rubin, HBM is essential because these chips process massive amounts of information at once. Without enough memory bandwidth, the processor would sit idle waiting for data, much like trying to pour water through a tiny straw. The more HBM capacity and bandwidth a chip has, the larger the models it can hold and the faster it can handle tasks such as training AI models, running simulations, or analyzing huge datasets.
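The straw analogy can be put into rough numbers. The sketch below is a minimal illustration and not a model of any real chip: the function name and both bandwidth figures are hypothetical, chosen only to show that the time to move a fixed amount of data shrinks in proportion to bandwidth. The 288GB figure is the Rubin capacity cited above.

```python
def seconds_to_stream(data_gb: float, bandwidth_gb_per_s: float) -> float:
    """Time to move data_gb gigabytes over a link of the given bandwidth.

    This ignores latency, caching, and overlap with computation; it only
    captures the "width of the straw" effect.
    """
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return data_gb / bandwidth_gb_per_s


# Hypothetical bandwidths for illustration only (not vendor specifications).
narrow_link = 100.0    # GB/s, an assumed "tiny straw"
wide_link = 1000.0     # GB/s, an assumed link ten times wider

capacity_gb = 288.0    # HBM capacity cited for Rubin in this article

slow = seconds_to_stream(capacity_gb, narrow_link)
fast = seconds_to_stream(capacity_gb, wide_link)
print(f"narrow link: {slow:.2f} s, wide link: {fast:.2f} s")
```

Under these assumed figures, a link ten times wider moves the same data in one tenth of the time, which is the whole point of placing wide, stacked memory next to the processor.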

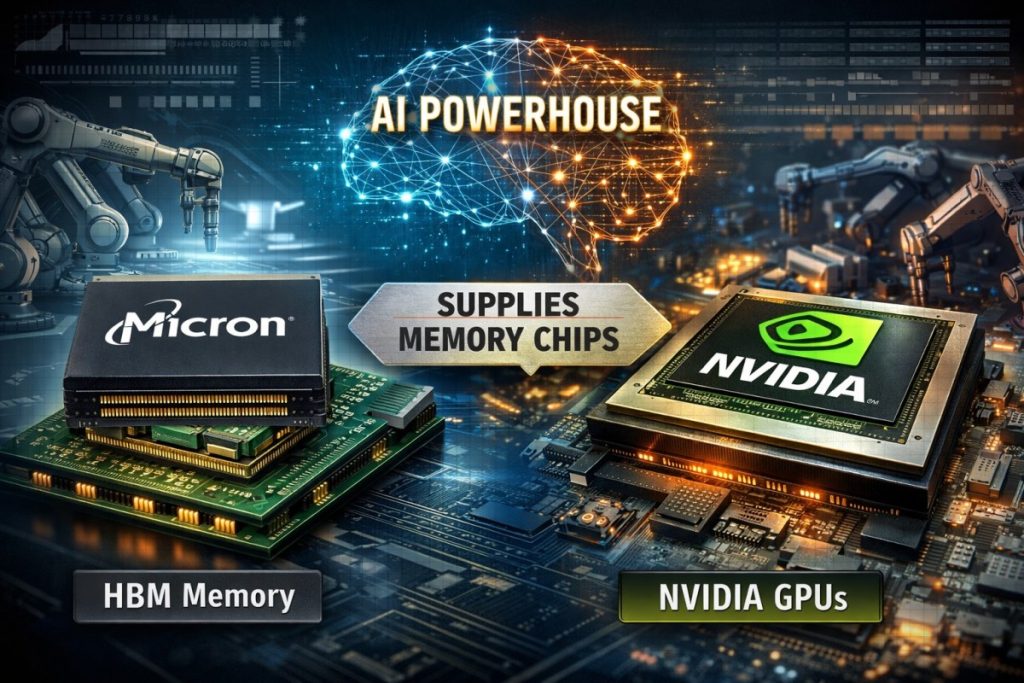

How Micron Supports Nvidia’s AI Chips

In the semiconductor supply chain, Micron is a critical supplier for Nvidia. Nvidia designs the Graphics Processing Units (GPUs) that perform AI calculations, but these GPUs cannot work without massive amounts of high-speed memory, and Micron’s memory chips help fill that need. Think of it this way: Nvidia builds the “brain” of the AI chip, and Micron supplies the “fuel” that keeps it running at full speed. Because GPUs rely so heavily on HBM, any delay or shortage in memory production directly limits how many AI chips can be built and shipped. As a result, investors closely watch Micron’s stock price and premarket trading to see how the company is responding to rising AI demand.

Why HBM Is Hard to Make

Producing HBM is complex. Each bit of HBM takes about three times the wafer area of standard memory. This “die penalty” slows production and increases costs, because every wafer devoted to HBM yields far fewer bits than it would as conventional memory. To meet AI demand, Micron has shifted factory capacity away from regular PC and smartphone memory toward HBM. As a result, shortages are spreading to other tech products, including laptops, servers, and smartphones. This ripple effect shows that the problem goes beyond AI: almost every modern device depends on memory.
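The effect of the die penalty on overall supply can be sketched with simple arithmetic. The example below assumes the three-to-one ratio described above and a hypothetical fleet of 100 wafers; the function name and the 30 percent share are illustrative assumptions, not Micron figures.

```python
def total_bits(wafers: float, hbm_share: float, die_penalty: float = 3.0) -> float:
    """Relative bit output when a share of wafers is moved to HBM.

    The result is in units of "bits from one standard-memory wafer".
    A wafer used for HBM yields 1 / die_penalty of that amount.
    """
    if not 0.0 <= hbm_share <= 1.0:
        raise ValueError("hbm_share must be between 0 and 1")
    if die_penalty <= 0:
        raise ValueError("die_penalty must be positive")
    standard_bits = wafers * (1.0 - hbm_share)
    hbm_bits = wafers * hbm_share / die_penalty
    return standard_bits + hbm_bits


# Hypothetical scenario: 100 wafers, before and after moving 30% to HBM.
before = total_bits(100, 0.0)
after = total_bits(100, 0.3)
print(f"before: {before:.0f} units, after: {after:.0f} units")
```

In this assumed scenario, moving 30 wafers to HBM removes 30 units of standard memory but adds only about 10 units of HBM, so total bit output falls by roughly a fifth even though no factory has closed. That gap is why an HBM ramp can tighten supply for laptops and phones.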

Micron’s Expansion and R&D Investment

To tackle the shortage, Micron is investing $20 billion in 2026 to expand HBM production, with new factories and accelerated projects in Boise and Singapore. On top of capacity expansion, Micron recently secured a major Taiwanese government subsidy to support a multi-year R&D project focused on advanced HBM technology. The funding underlines how important HBM has become to the global memory supply chain and supports work on next-generation memory.

Memory Becomes the “Oxygen” for AI

Memory has moved from a supporting role to being essential for AI chips. Analysts now call it the “oxygen” for AI accelerators. The HBM market is projected to reach $100 billion by 2028, two years earlier than previous estimates. Companies like Micron are no longer just memory suppliers. They are key players in the AI economy, helping set the pace of innovation worldwide.

A Long-Term Shift in Tech

The memory shortage reflects more than temporary production delays. Growing AI demand, complex manufacturing, and massive capital investments are reshaping the memory market. How quickly companies like Micron scale up HBM production and innovate will influence AI, computing, and consumer electronics for years to come. Investors continue to track Micron’s share price and premarket activity as a measure of the company’s performance.